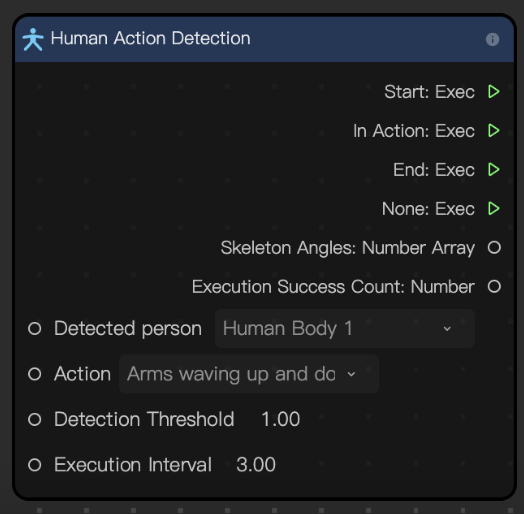

Human Action Detection

The Human Action Detection node monitors a sequence of poses to detect specific user actions. Each action is defined as a series of poses that must be completed in a specified order within a set time limit (5 seconds by default). If the user performs the poses in the correct order within the time limit, the action is marked as successful. Performing the poses out of order or exceeding the time limit results in a failed action.

For optimal accuracy, users should face the camera as directly as possible and use the front-facing camera of their device.

Input

| Name | Data Type | Description |
|---|---|---|
| Detect | String | The body to detect. "Body 1" is the first person to appear in the scene and "Body 2" is the second. |
| Pose | String | The action to detect, such as Jump or Left Punch. |
| Detection Threshold | Number | Determines how strict the detection logic is. The higher the number, the stricter the logic. The default value is 1. |
| Max Time | Number | The maximum time, in seconds, allowed to complete the entire action. The default is 5 seconds. |
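When testing effect logic outside the editor, it can help to mirror the Input table as a small settings record. The following is a minimal Python sketch, not part of any documented API: the class and field names are hypothetical, and only the allowed values and the defaults (threshold 1, a 5-second time limit) come from this page.

```python
from dataclasses import dataclass

# Allowed values taken from this page; the names below are hypothetical.
SUPPORTED_ACTIONS = ("Jump", "Left Punch", "Right Punch", "Left Kick", "Right Kick")
SUPPORTED_BODIES = ("Body 1", "Body 2")


@dataclass(frozen=True)
class ActionDetectionInputs:
    pose: str                          # the action to detect
    detect: str                        # which body to watch ("Body 1" or "Body 2")
    detection_threshold: float = 1.0   # higher = stricter (documented default: 1)
    max_time: float = 5.0              # seconds for the whole action (documented default: 5)

    def __post_init__(self) -> None:
        # Reject values the node does not offer.
        if self.pose not in SUPPORTED_ACTIONS:
            raise ValueError(f"unknown action: {self.pose!r}")
        if self.detect not in SUPPORTED_BODIES:
            raise ValueError(f"unknown body: {self.detect!r}")
        if self.detection_threshold <= 0:
            raise ValueError("detection_threshold must be positive")
        if self.max_time <= 0:
            raise ValueError("max_time must be positive")
```

For example, `ActionDetectionInputs(pose="Right Punch", detect="Body 1")` picks up the default threshold and time limit, while an unsupported action such as `"Squat"` raises `ValueError`.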

Output

| Name | Data Type | Description |
|---|---|---|
| Start | Exec | Executes the next node when the specified action is detected. |
| Continue | Exec | Executes the next node continuously while the action is being performed. |
| End | Exec | Executes the next node once the action ends. |
| None | Exec | Executes the next node on any frame in which the action isn't detected. |
| Repeat | Exec | Executes the next node every time the action is successfully completed. |
| Skeleton Angles | Array | Outputs the angles between bones, in order. Multiple bone angles can be combined to calculate a pose manually. |
| Execution Count | Number | The number of times the specified action has been successfully completed. |
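The Skeleton Angles output reports angles between bones. As an illustration of how one such angle can be derived, the function below computes the angle at a joint from three 2D keypoints using the dot product. The keypoint format, the coordinate system, and the name `bone_angle` are assumptions; this page does not document how the node measures its angles or in which order it lists them.

```python
import math
from typing import Tuple

Point = Tuple[float, float]  # hypothetical 2D keypoint (x, y)


def bone_angle(a: Point, joint: Point, b: Point) -> float:
    """Angle in degrees at `joint` between the bones joint->a and joint->b."""
    v1 = (a[0] - joint[0], a[1] - joint[1])
    v2 = (b[0] - joint[0], b[1] - joint[1])
    n1 = math.hypot(*v1)
    n2 = math.hypot(*v2)
    if n1 == 0 or n2 == 0:
        raise ValueError("bone has zero length")
    cos_theta = (v1[0] * v2[0] + v1[1] * v2[1]) / (n1 * n2)
    # Clamp to [-1, 1] to guard against floating-point drift before acos.
    cos_theta = max(-1.0, min(1.0, cos_theta))
    return math.degrees(math.acos(cos_theta))
```

With shoulder, elbow, and wrist in a straight line the elbow angle is about 180°, and a right-angle bend gives about 90°. A straight arm is the kind of signal you might combine with other angles when calculating a pose such as a punch manually.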

How the Human Action Detection Node Works

Setup: Select the action to detect, adjust the detection threshold, and set the maximum time allowed to complete the action (Max Time).

Each action is recognized as a combination of specific poses performed in a required order. The following actions are available:

- Jump

- Left Punch

- Right Punch

- Left Kick

- Right Kick

Monitoring: The selected person’s poses are monitored in real time and checked against the specified action.
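The Detect input selects a person by order of appearance. The sketch below assumes a hypothetical upstream tracker that gives each person a stable integer ID across frames (the node's tracking is not described on this page) and assigns "Body 1", "Body 2", and so on the first time each ID is seen.

```python
from typing import Dict, List


class BodyLabeler:
    """Assigns "Body 1", "Body 2", ... in order of first appearance."""

    def __init__(self) -> None:
        self._labels: Dict[int, str] = {}  # hypothetical track ID -> body label

    def update(self, track_ids_in_frame: List[int]) -> Dict[str, int]:
        """Label any newly seen IDs, then return {label: track ID} for this frame."""
        for track_id in track_ids_in_frame:
            if track_id not in self._labels:
                self._labels[track_id] = f"Body {len(self._labels) + 1}"
        present = set(track_ids_in_frame)
        return {label: tid for tid, label in self._labels.items() if tid in present}


labeler = BodyLabeler()
labeler.update([7])     # person 7 appears first and becomes "Body 1"
labeler.update([7, 3])  # person 3 appears next and becomes "Body 2"
```

This page only defines Body 1 and Body 2 and does not say what happens when a person leaves the scene; the sketch simply keeps each label for as long as its ID exists.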

Pose matching: As each pose is performed, the detected pose is compared to the target sequence.
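This page does not specify how a single frame is judged to match a pose or how Detection Threshold enters that decision. One plausible approach, shown purely as an illustration, compares measured joint angles against a template of target angles and shrinks the allowed tolerance as the threshold rises, so higher values are stricter. The template format, the joint names, `base_tolerance_deg`, and the divide-by-threshold rule are all assumptions.

```python
from typing import Dict

PoseTemplate = Dict[str, float]  # hypothetical: joint name -> target angle in degrees


def matches_pose(angles: Dict[str, float], template: PoseTemplate,
                 detection_threshold: float = 1.0,
                 base_tolerance_deg: float = 30.0) -> bool:
    """Return True if every templated joint angle is within tolerance.

    The tolerance shrinks as detection_threshold grows, so a higher threshold
    is stricter (an assumed mapping, not the node's documented formula).
    """
    if detection_threshold <= 0:
        raise ValueError("detection_threshold must be positive")
    tolerance = base_tolerance_deg / detection_threshold
    for joint, target in template.items():
        measured = angles.get(joint)
        # A missing joint, or one too far from its target, fails the match.
        if measured is None or abs(measured - target) > tolerance:
            return False
    return True
```

For an extended-arm template of `{"right_elbow": 170.0}`, a measured 150° matches at the default threshold (tolerance 30°) but not at threshold 2 (tolerance 15°).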

Action evaluation: If all poses are completed in the correct order and within the specified time, the action is marked as successfully executed. If not, it is marked as failed.
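The evaluation rule (all poses, in order, within the time limit) can be sketched as a small state machine that consumes one classified pose per frame. The pose names in `ACTIONS` are hypothetical placeholders, since the node's internal pose definitions are not documented, and restarting the clock while the opening pose is held is a design choice of this sketch rather than documented behavior.

```python
from enum import Enum
from typing import Optional, Sequence


class Status(Enum):
    IDLE = "idle"                # waiting for the first pose
    IN_PROGRESS = "in_progress"  # some poses matched, action not finished
    SUCCESS = "success"          # all poses matched in order within max_time
    FAILED = "failed"            # wrong order or time limit exceeded


# Hypothetical pose sequences for illustration only.
ACTIONS = {
    "Jump": ("standing", "crouch", "airborne", "standing"),
    "Right Punch": ("guard", "right_arm_extended", "guard"),
}


class ActionSequenceMatcher:
    """Tracks progress through an ordered pose sequence under a time limit."""

    def __init__(self, sequence: Sequence[str], max_time: float = 5.0) -> None:
        if not sequence:
            raise ValueError("sequence must contain at least one pose")
        self.sequence = tuple(sequence)
        self.max_time = max_time
        self.reset()

    def reset(self) -> None:
        self.step = 0                            # index of the next pose to match
        self.start_time: Optional[float] = None  # when the attempt began

    def feed(self, pose: str, t: float) -> Status:
        """Process the pose classified for one frame at time t (seconds)."""
        if self.step == 0:
            if pose != self.sequence[0]:
                return Status.IDLE
            self.step, self.start_time = 1, t
        elif self.step == 1 and pose == self.sequence[0]:
            # Still holding the opening pose: restart the clock so that
            # standing still does not count against max_time.
            self.start_time = t
            return Status.IN_PROGRESS
        elif t - self.start_time > self.max_time:
            self.reset()
            return Status.FAILED
        elif pose == self.sequence[self.step]:
            self.step += 1
        elif pose != self.sequence[self.step - 1]:
            # Neither the expected next pose nor the pose being held.
            self.reset()
            return Status.FAILED

        if self.step == len(self.sequence):
            self.reset()
            return Status.SUCCESS
        return Status.IN_PROGRESS
```

This sketch fails on any unexpected pose. A real detector would likely need to tolerate noisy transitional frames, for example by ignoring poses that are not part of the sequence.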

Output events triggered: Output events are triggered to indicate when the action starts, while it is in progress, and when it completes, so the effect can respond to the user in real time.
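The exec outputs can be read as reactions to a per-frame detection status. The sketch below is one interpretation of the Output table, not documented behavior: it fires None on idle frames, Start when an attempt begins, Continue while it is in progress, End when it succeeds or fails, and Repeat (incrementing the execution count) only on success. The status labels and the function name are hypothetical.

```python
from typing import Iterable, List, Tuple

VALID_STATUSES = {"idle", "in_progress", "success", "failed"}


def output_events(statuses: Iterable[str]) -> Tuple[List[List[str]], int]:
    """Map per-frame statuses to the exec pins fired on each frame.

    Returns the pins fired per frame and the final execution count.
    """
    fired_per_frame: List[List[str]] = []
    active = False       # True while an attempt is underway
    execution_count = 0  # successful completions so far
    for status in statuses:
        if status not in VALID_STATUSES:
            raise ValueError(f"unknown status: {status!r}")
        fired: List[str] = []
        if status == "idle":
            fired.append("None")        # action not detected on this frame
        else:
            if not active:
                fired.append("Start")   # first frame of a new attempt
                active = True
            elif status == "in_progress":
                fired.append("Continue")
            if status in ("success", "failed"):
                fired.append("End")     # the attempt is over either way
                active = False
            if status == "success":
                fired.append("Repeat")  # once per completed action
                execution_count += 1
        fired_per_frame.append(fired)
    return fired_per_frame, execution_count
```

In a fitness effect, the returned count is what you would display as a repetition counter.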

Example Use Cases

Action detection can be used to recognize defined actions to trigger various interactive effects, including:

- Fitness and exercise: Detect and count specific exercises, such as squats, jumping jacks, or punches, to track workouts

- Gesture-based controls: Trigger a specific effect when the user completes a certain action

- Fun effects: Allow users to trigger animations or effects (like confetti or sparkles) by completing an action sequence, enhancing video content with interactive elements